BoardDocs Data Exposure: When 'Intended Functionality' Becomes a Vulnerability

It all started when I began looking into Omnia Partners, a large cooperative purchasing organization. While reviewing their online presence, I noticed an unusual amount of Personally Identifiable Information (PII) and Controlled Unclassified Information (CUI) that was publicly accessible. Omnia Partners deserves its own write-up, but the real rabbit hole began when I discovered a document that wasn’t hosted on their site at all. It was on BoardDocs, a platform owned by Diligent Corporation. School boards, municipalities, and government agencies widely use BoardDocs to publish meeting agendas, contracts, and internal documents for public transparency.

That one document set me on a new path. Once I realized it wasn’t an isolated case, I started actively investigating BoardDocs itself. It quickly became clear that many documents were publicly accessible through predictable URLs, including some that contained sensitive financial and personal data. What began as a single finding connected to Omnia Partners grew into a much broader issue affecting multiple organizations across the country.

When I shared my findings with my boss, he immediately recognized the scope of the problem. His advice was straightforward: submit it through Bugcrowd so it gets in front of the vendor, and you might earn a few dollars for finding it. So that’s what I did. I documented the exposed URLs, the unauthenticated access, and the presence of sensitive PII, and filed it through the vendor’s Bugcrowd program.

The Initial Report

When I filed the report, I outlined three key issues:

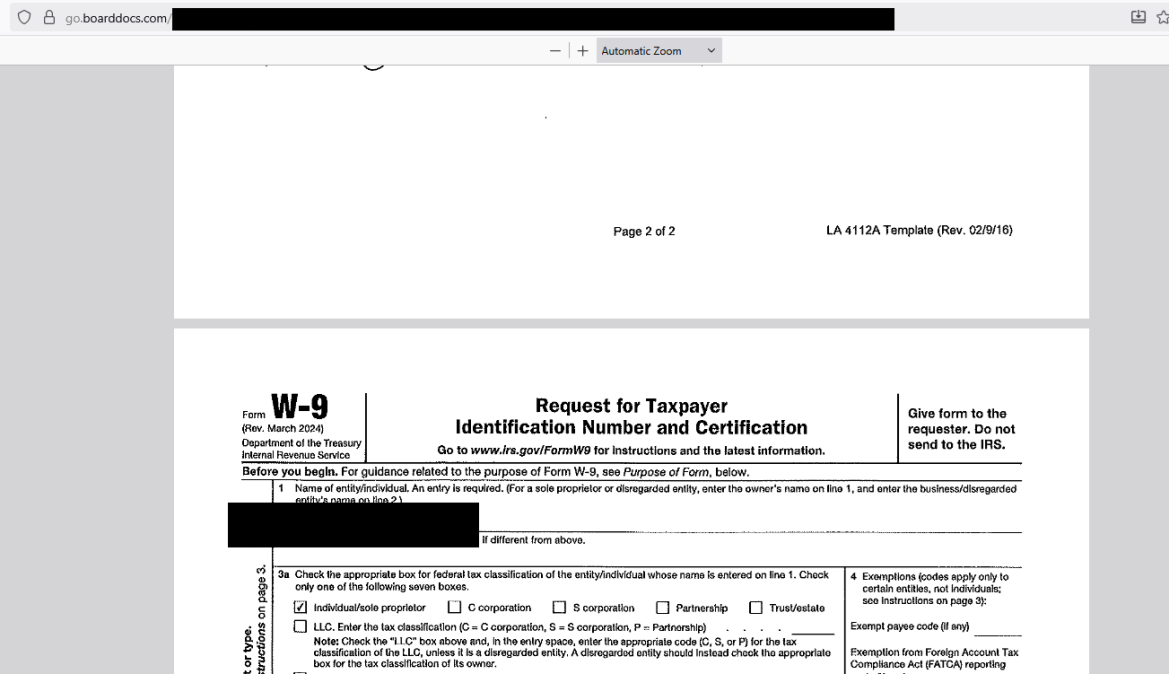

- Certain documents were accessible without any authentication.

- Predictable URLs exposed sensitive files through direct object references.

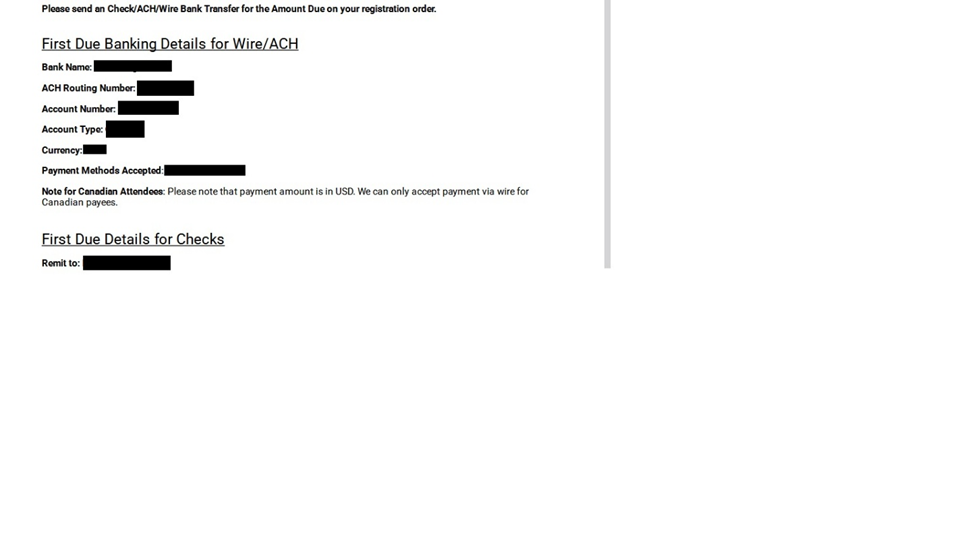

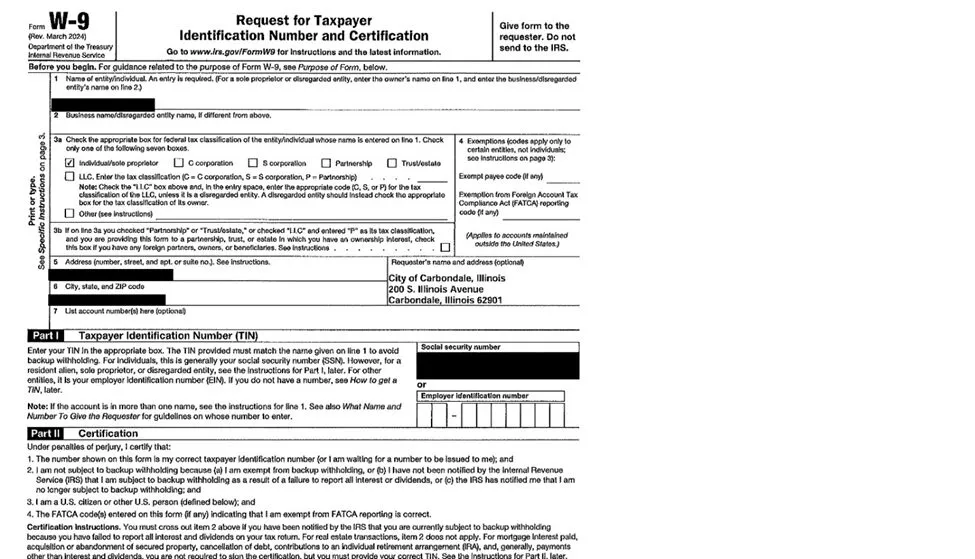

- Some of those files contained PII, including financial records and Social Security Numbers.
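For readers less familiar with the pattern behind the first two findings, here is a minimal Python sketch of it. It is a hypothetical illustration, not BoardDocs’ actual code. The `Document` type, the `visibility` values, the `DOCS` store, and both handler functions are invented for this example. The first handler serves whatever ID appears in the URL, which is what makes predictable identifiers dangerous. The second checks visibility and the requester’s organization on every request. `random_id()` shows how non-sequential identifiers make guessing impractical.

```python
import secrets
from dataclasses import dataclass
from typing import Optional


# Hypothetical document model; NOT BoardDocs' real schema.
@dataclass
class Document:
    doc_id: str
    owner_org: str
    visibility: str  # assumed values: "public" or "private"
    body: str


# Hypothetical in-memory store keyed by document ID.
DOCS: dict[str, Document] = {}


def sequential_id(n: int) -> str:
    # Predictable: anyone who sees ID 000041 can simply try 000042, 000043, ...
    return f"{n:06d}"


def random_id() -> str:
    # 16 random bytes (~128 bits), URL-safe; infeasible to enumerate by guessing.
    return secrets.token_urlsafe(16)


def get_document_vulnerable(doc_id: str) -> Optional[str]:
    # Insecure direct object reference: returns any document whose ID is
    # known or guessed, with no authentication or authorization check.
    doc = DOCS.get(doc_id)
    return doc.body if doc else None


def get_document_checked(doc_id: str, requester_org: Optional[str]) -> Optional[str]:
    # Authorization is enforced on every request, regardless of how the ID was obtained.
    doc = DOCS.get(doc_id)
    if doc is None:
        return None
    if doc.visibility == "public" or doc.owner_org == requester_org:
        return doc.body
    return None  # private document and requester is not the owning organization
```

Random identifiers only make enumeration harder. Once a link leaks or gets indexed, the per-request authorization check is what actually keeps a private file private. Neither control helps if a sensitive file is explicitly marked public, which is the scenario the vendor later described.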

Based on these findings, I expected the issue to be classified as an Improper Access Control vulnerability. OWASP calls this category Broken Access Control and ranks it first in its 2021 Top 10. Bugcrowd’s own Vulnerability Rating Taxonomy even lists it as a textbook P1.

The Response

Here is the part that shocked us. Bugcrowd’s triage team marked the submission as “publicly available information / OSINT” and closed it as “not applicable.” In other words, because the documents could be found online, the exposure wasn’t considered a vulnerability at all.

Let that sink in. There was no acknowledgment of the unauthenticated access path, no recognition that sensitive PII and financial data were involved, and no indication that the issue would be passed along to the vendor for deeper review.

That was it: the case was closed, the report dismissed, and the matter considered resolved.

I was in shock. Thousands of private documents were sitting there for anyone to see. They contained Social Security Numbers, credit card details, bank account information, and background checks. Unsure what else to do, I called BoardDocs’ IT department directly.

The first time, I was told, “We’ll call you back.” They never did.

A few days later, I called again and finally got someone on the phone. I explained what I was seeing: they were exposing client and customer Social Security Numbers. His response floored me:

“Once it’s posted, we can’t do anything about it.”

He added that if anything were to be done, it would be on me to reach out to each school and let them know.

So I tried. For several days I called schools directly, but nobody wanted to talk to me, and eventually I gave up. This was purely about doing the right thing. I wasn’t doing it for money or recognition; I was trying to protect people. But it felt like no one wanted to listen.

Months Later

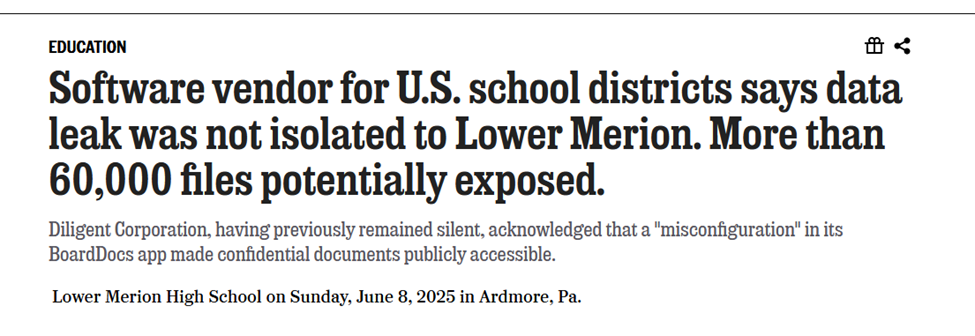

Well, this is awkward… Several news outlets began reporting exactly what I had raised months earlier: sensitive government and financial documents exposed online through BoardDocs. Search engines were indexing information that should never have been public.

The 74 revealed that around 64,000 private files across hundreds of school boards were exposed due to a BoardDocs misconfiguration. The Philadelphia Inquirer documented a case in Lower Merion, where “highly sensitive” internal records, legal memos, and employee details were accidentally made public. And Yahoo News highlighted how districts nationwide were unaware that confidential files had been published, with private folder settings failing to provide real protection.

Just as we had tried to explain to Bugcrowd originally, this wasn’t a handful of stray files. It was a systemic design issue surfacing across multiple organizations. The same risks I’d flagged, and been told were “not applicable,” were now front-page news.

The Reevaluation

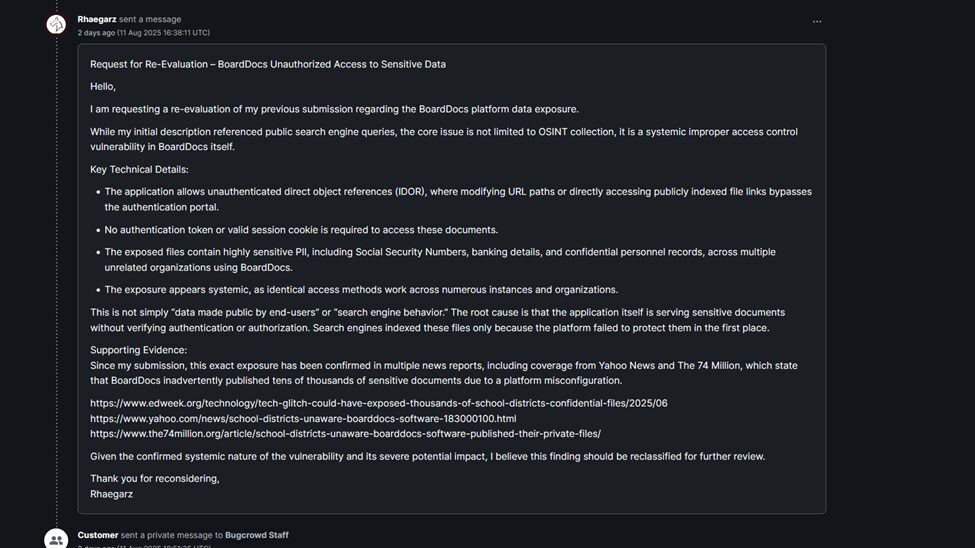

Armed with that confirmation, I went back to Bugcrowd and requested a reevaluation. This time, I stripped the report down to its core technical impact: unauthenticated access to sensitive data across multiple organizations.

Bugcrowd escalated the ticket to their operations team, created a blocker, and initiated internal discussions. Then… we waited again.

The Response (Part 2)

The vendor’s official reply finally landed:

“This behavior is consistent with the product’s intended and documented functionality: when a customer chooses to make a document public, it becomes accessible and searchable by engines like Google… Diligent has already deployed a fix for this.”

In other words, since customers had made the documents public, it wasn’t an issue.

Why That Explanation Doesn’t Sit Right

At first it seemed like a reasonable response, but the more I thought about it, the more I questioned it.

If something is marked “public,” of course it should be discoverable. But when hundreds of organizations all “accidentally” expose sensitive data in the same way, it stops being a one-off customer mistake and becomes evidence of a design flaw.

If the defaults, warnings, or controls built into the platform consistently lead to high-impact misconfigurations, then the platform itself is complicit. And the fact that a fix had already been deployed was, in its own way, an acknowledgment that the original design wasn’t safe enough.

Calling this “intended functionality” doesn’t erase the fact that credit card and SSN data were all over Google.

Lessons Learned

- For organizations using BoardDocs/Diligent: Audit your “public” documents, and don’t assume the platform is protecting you.

- For security researchers: Even when your initial report is dismissed, your instincts may still be right. Stay persistent.

- For vendors: “Working as intended” shouldn’t end the conversation. If the intended behavior consistently leads to sensitive data exposure, it needs to be redesigned.
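For organizations acting on the first lesson, part of a document audit can be automated. The following is a minimal, heuristic Python sketch. It assumes you have already extracted the text of each public document, for example from PDFs with a separate tool. The function names and patterns are illustrative only and are not part of any BoardDocs or Diligent API. The code flags strings shaped like U.S. Social Security Numbers, skipping ranges that are never issued (area 000, 666, or 900–999, group 00, serial 0000). It also runs a Luhn checksum over card-number-like digit runs to reduce false positives.

```python
import re

# Heuristic SSN pattern: NNN-NN-NNNN, excluding never-issued areas/groups/serials.
SSN_RE = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")

# Heuristic card pattern: 13-19 digits, optionally separated by spaces or dashes.
CARD_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(c) for c in number if c.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second digit.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return len(digits) >= 13 and total % 10 == 0


def flag_sensitive(text: str) -> dict[str, list[str]]:
    """Hypothetical helper: list SSN-like and Luhn-valid card-like strings in `text`."""
    findings: dict[str, list[str]] = {"ssn": SSN_RE.findall(text), "card": []}
    for match in CARD_RE.finditer(text):
        candidate = match.group().strip()
        if luhn_valid(candidate):
            findings["card"].append(candidate)
    return findings
```

Anything this flags still needs human review. A clean result also doesn’t prove a document is safe: scanned images, unusual formatting, and other PII such as bank account numbers or background-check details will slip past simple patterns.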

Closing Thoughts

Ultimately, my report was closed as “intended functionality.” That wouldn’t be a big deal, except that I had submitted my findings months before the media reports surfaced. Had the initial report been taken more seriously, some of that exposure might have been prevented.

This story isn’t about blame; it’s about awareness. Attackers don’t care whether something is called a bug, a feature, or a misconfiguration. If the data’s out there, it’s fair game.

So whether you’re an organization using these tools or a vendor building them, make “safe by default” your north star, because sometimes intended functionality is exactly what gets people burned.
