From Domain Fronting to PaaS Redirectors: The Evolution of Covert Communication

In cybersecurity, techniques evolve rapidly as defenders and attackers adapt to one another. This article explores one example of that evolution: the transition from domain fronting to Platform as a Service (PaaS) redirectors.

The Rise and Fall of Domain Fronting

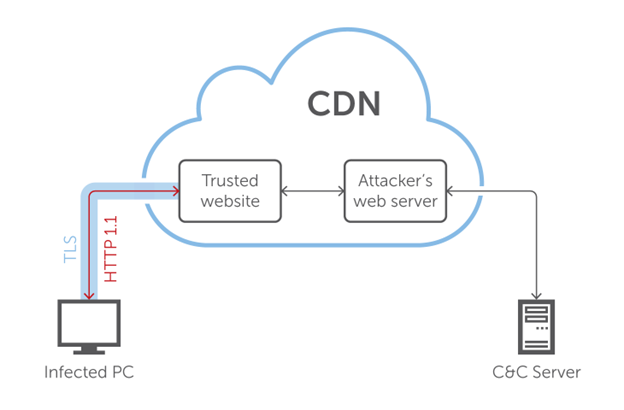

Domain fronting was a popular method for disguising the actual endpoint of network traffic, dating back to a 2015 research paper from Berkeley. It exploited the gap between the layers that name a connection’s destination: the DNS lookup and the TLS Server Name Indication (SNI), which are visible on the network, and the HTTP Host header, which is encrypted inside the HTTPS session.

By making the DNS request and TLS handshake for a benign domain while setting the HTTP request’s Host header to a different, potentially blocked or monitored domain, a client could reach the hidden destination and circumvent censorship on the network path. This discrepancy between the DNS and HTTP requests was possible due to the use of shared hosting infrastructures, like content delivery networks (CDNs), which route each request by its Host header after terminating TLS. Since the initial connection appeared to go to the benign domain, firewalls and other security devices would allow the traffic to pass.
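To make the mismatch concrete, here is a minimal JavaScript sketch that compares the name a network observer sees (the TLS SNI) with the name in the Host header. The function names `normalizeHost` and `looksFronted` and the hostnames are illustrative assumptions, not part of any particular tool. The check also assumes the observer can read the Host header at all, which generally requires TLS inspection or a vantage point at the CDN itself.

```javascript
// Hypothetical helper: normalize a hostname so cosmetic differences
// (case, an explicit port, a trailing dot) do not count as a mismatch.
function normalizeHost(host) {
  return host
    .trim()
    .toLowerCase()
    .replace(/:\d+$/, "") // drop an explicit port such as ":443"
    .replace(/\.$/, "");  // drop a trailing dot
}

// Returns true when the outer name (SNI) and the inner name (Host header)
// point at different domains, which is the signature of domain fronting.
function looksFronted(sniName, hostHeader) {
  return normalizeHost(sniName) !== normalizeHost(hostHeader);
}

console.log(looksFronted("allowed.example", "allowed.example:443")); // false
console.log(looksFronted("allowed.example", "hidden.example"));      // true
```

A mismatch is only a signal, not proof of abuse: some legitimate multi-tenant setups can also produce differing names, so a real detection would need more context than this comparison.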

Due to the simplicity of implementation and high success rate, a variety of actors widely used this technique. Privacy-focused tools and services, like Tor and Signal, used it to evade censorship in countries with restrictive internet policies. Meanwhile, cybercriminals and Advanced Persistent Threat (APT) groups leveraged domain fronting to hide their activities and infrastructure.

However, the technique’s effectiveness was curtailed when major providers like Google and Amazon Web Services began to clamp down on domain fronting in 2018, citing abuse of their services, although it is widely suspected that pressure from the Russian and Chinese governments played a part. Regardless, with the shutdown of the technique by major hosting providers, domain fronting became largely ineffective and fell out of use.

Platform-as-a-Service (PaaS) Redirectors: The New Kid on the Block

In the wake of domain fronting’s demise, a new technique has come to the fore: PaaS Redirecting.

PaaS redirectors leverage the infrastructure of PaaS providers to disguise the destination of network traffic. Instead of manipulating the DNS and HTTP headers, PaaS redirectors employ various services provided by PaaS providers to reroute traffic. This can be as simple as a web app hosted on the PaaS that forwards traffic to the actual destination or as complex as using serverless functions and other cloud-native services to process and redirect traffic.

One of the primary advantages of PaaS redirectors is that they can leverage the reputation of the PaaS provider to evade detection. Most PaaS providers have many legitimate customers, and blocking all traffic from a PaaS provider would also block those legitimate services. This makes it much more difficult for defenders to block traffic from PaaS redirectors without also blocking legitimate traffic. It also removes some of the difficulty in obtaining seasoned domains to be used in our infrastructure. As a bonus, the PaaS provider also handles maintaining the TLS certs for us.

Building a PaaS Redirector

Building a PaaS redirector involves setting up services on a PaaS provider that can receive and redirect traffic. While the specific steps can vary based on the PaaS provider and the desired configuration, the general process involves the following steps:

First, select a PaaS provider. The choice of provider can depend on a variety of factors, such as cost, ease of use, and the specific services offered. Some popular PaaS providers include Amazon Web Services (AWS), Google Cloud, and Microsoft Azure. Just be careful and don’t forget to turn off your AWS instances.

Building a Serverless Function

The core of a PaaS redirector is a web app or serverless function that receives and forwards network traffic. This can be a simple web server that forwards all incoming requests to another server or a more complex app that processes the incoming requests in some way before forwarding them. Theoretically, these could be as complex as data transforms that allow the PaaS redirector to be used with multiple C2s simultaneously, although we tend to stick with one and have it mostly forward traffic.

When setting up the serverless function, it’s important to configure it to forward traffic to the desired destination. This can often be done by setting an environment variable or configuration option with the URL of the destination server. One of the nice things about this technique is that the destination server can use the default URL provided by its VPS provider: the target never sees that address, so we don’t have to worry about it looking odd.
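As a rough sketch of that configuration step, the JavaScript below reads the destination from a configuration object and validates it before use. The helper name `resolveTarget`, the `env` parameter, and the hostname `backend.example` are assumptions made for illustration; how configuration actually reaches your function depends on the platform.

```javascript
// Hypothetical helper: read and validate the forwarding destination.
// `env` stands in for whatever configuration object the platform provides.
function resolveTarget(env) {
  const raw = env.TARGET_URL;
  if (typeof raw !== "string" || raw.length === 0) {
    throw new Error("TARGET_URL is not set");
  }
  const target = new URL(raw); // throws a TypeError if the value is malformed
  if (target.protocol !== "https:" && target.protocol !== "http:") {
    throw new Error(`Unsupported scheme: ${target.protocol}`);
  }
  return target;
}

// Example with a made-up destination.
const exampleTarget = resolveTarget({ TARGET_URL: "https://backend.example:8443" });
console.log(exampleTarget.host); // backend.example:8443
```

Failing fast on a missing or malformed value keeps a misconfigured deployment from silently forwarding traffic to the wrong place. On Cloudflare Workers written in module syntax, the `env` object is the second argument passed to the `fetch` handler, so a helper like this could be called at the top of that handler.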

For our example, we will build a Cloudflare Worker, which allows you to write JavaScript that runs on Cloudflare’s edge network. In the code below, we set up a Cloudflare Worker that listens for incoming fetch events. When a request comes in, we create a new URL with the same path and query parameters as the original request, but with the host and protocol of our target URL. We then create a new Request object with this URL and the original request’s method, headers, and body, and forward this request to the target URL.

    // Destination that incoming requests are forwarded to.
    const TARGET_URL = "https://example.com";

    addEventListener("fetch", event => {
      event.respondWith(handleRequest(event.request));
    });

    async function handleRequest(request) {
      // Keep the original path and query string, but swap in the
      // target's scheme, hostname, and port.
      const target = new URL(TARGET_URL);
      const url = new URL(request.url);
      url.protocol = target.protocol;
      url.hostname = target.hostname;
      url.port = target.port;

      // Copy the original request's method, headers, and body onto the new URL.
      const newRequest = new Request(url.toString(), request);

      return fetch(newRequest);
    }

This is a very basic example of using a Cloudflare Worker as a PaaS redirector. It is missing some key components, like error handling and complex routing rules, but it can be extended by expanding the handleRequest function.
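One possible way to extend handleRequest with routing rules and error handling is sketched below. The route table, the helper names `pickTarget` and `forwardSafely`, and the `.example` hostnames are all hypothetical; treat this as a starting point under those assumptions rather than a finished design.

```javascript
// Hypothetical routing table: the first matching path prefix wins.
const ROUTES = [
  { prefix: "/api/", target: "https://backend-a.example" },
  { prefix: "/static/", target: "https://backend-b.example" },
];

// Choose a destination for a request path, falling back to a default.
function pickTarget(pathname, routes, fallback) {
  const match = routes.find(route => pathname.startsWith(route.prefix));
  return match ? match.target : fallback;
}

// Run the upstream call and turn any failure into a 502 response
// instead of an unhandled exception.
async function forwardSafely(doFetch) {
  try {
    return await doFetch();
  } catch (err) {
    return new Response("Bad gateway", { status: 502 });
  }
}

console.log(pickTarget("/api/users", ROUTES, "https://default.example"));
```

Inside handleRequest, the result of `pickTarget(url.pathname, ROUTES, TARGET_URL)` could replace the fixed target, and the final call could become `forwardSafely(() => fetch(newRequest))`. Keeping the routing decision in a pure function makes it easy to test without deploying anything.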

Deploying Your Worker

To deploy this worker, you would use Cloudflare’s web interface or the CLI tool Wrangler. Create a Cloudflare account, install Wrangler, and then use Wrangler to deploy the worker. After installing Wrangler, create a new project:

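    wrangler generate my-worker
    cd my-worker
    # replace index.js with your worker code and update wrangler.toml
    wrangler publish --env production

The generate command creates a directory named my-worker with a default index.js and a wrangler.toml file. Replace the default file with your worker code, update the configuration, then publish, and you’re off to the races. Note that the --env production flag expects a matching [env.production] section in wrangler.toml, and that these commands follow older Wrangler releases: newer releases deploy with wrangler deploy instead of wrangler publish, so check the version you have installed.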

The Future of Covert Communication

As we move forward, it’s clear the cat-and-mouse game of covert communication will continue. Techniques like domain fronting and PaaS redirectors will rise and fall as defenders adapt and attackers innovate.

However, the need for robust, flexible defenses remains constant. Defenders must keep pace as attackers leverage the complexity and scale of modern internet infrastructure. That requires a deep understanding of attacker techniques and the ability to adapt as new ones emerge.
