Survivorship Bias in Threat Emulation: What We Don’t See

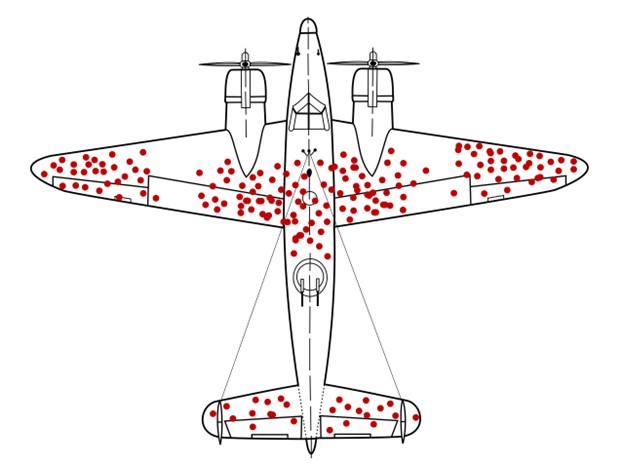

If you have spent any time on our Discord, you have almost certainly seen discussions about the prevalence of PowerShell and how it’s still used in most modern attacks. After all, we are still big fans of it in threat emulation and still publish research on it. Inevitably though, someone will break out this image.

If you aren’t familiar with it, it shows the distribution of damage on bombers that returned home during World War II. If you were deciding where to add armor to the aircraft, the initial inclination would be to add it to the most frequently damaged areas. However, this is the distribution of damage on planes that still made it home. The aircraft that were hit in the areas showing almost no damage did not. That tendency to focus only on what survived some selection process, while ignoring what did not, is known as Survivorship Bias.

Survivorship Bias and Cyber Reporting

How does this relate to the fact that we see PowerShell being reported on constantly in attacks? Well, reporting is, by nature, only about the threat actors that have been caught. What about all the ones that didn’t get caught? There’s no way to examine them because we don’t know what they did, but they were likely doing something different from those that did get caught. Therein lies the problem of threat emulation.
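To make the selection effect concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration: the technique names, the detection probabilities, and the premise that each technique is used equally often are invented, not measured from real intrusions. It only shows how a technique that is easy to detect can dominate reporting even when it is no more common in practice.

```python
import random
from collections import Counter

# Hypothetical detection probabilities per technique family. These numbers
# are invented for illustration and are not drawn from any real data set.
DETECTION_PROBABILITY = {
    "powershell_loader": 0.70,
    "dotnet_assembly": 0.40,
    "custom_native_implant": 0.05,
}


def simulate_reporting(intrusions, seed=0):
    """Return (used, reported) counters for a number of simulated intrusions."""
    rng = random.Random(seed)
    techniques = list(DETECTION_PROBABILITY)
    used = Counter()
    reported = Counter()
    for _ in range(intrusions):
        # Assumption: every technique is equally common in the real world.
        technique = rng.choice(techniques)
        used[technique] += 1
        # Only intrusions that get caught ever show up in public reporting.
        if rng.random() < DETECTION_PROBABILITY[technique]:
            reported[technique] += 1
    return used, reported


def share(counter, key):
    """Fraction of the counter's total that belongs to key."""
    total = sum(counter.values())
    return counter[key] / total if total else 0.0


if __name__ == "__main__":
    used, reported = simulate_reporting(30_000)
    for name in DETECTION_PROBABILITY:
        print(f"{name:22} actual {share(used, name):.0%}  reported {share(reported, name):.0%}")
```

Under these assumptions, each technique accounts for roughly a third of actual intrusions, yet the easily detected one makes up most of what gets reported and the hard-to-detect one nearly vanishes from the reports.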

The Open-Source Advantage

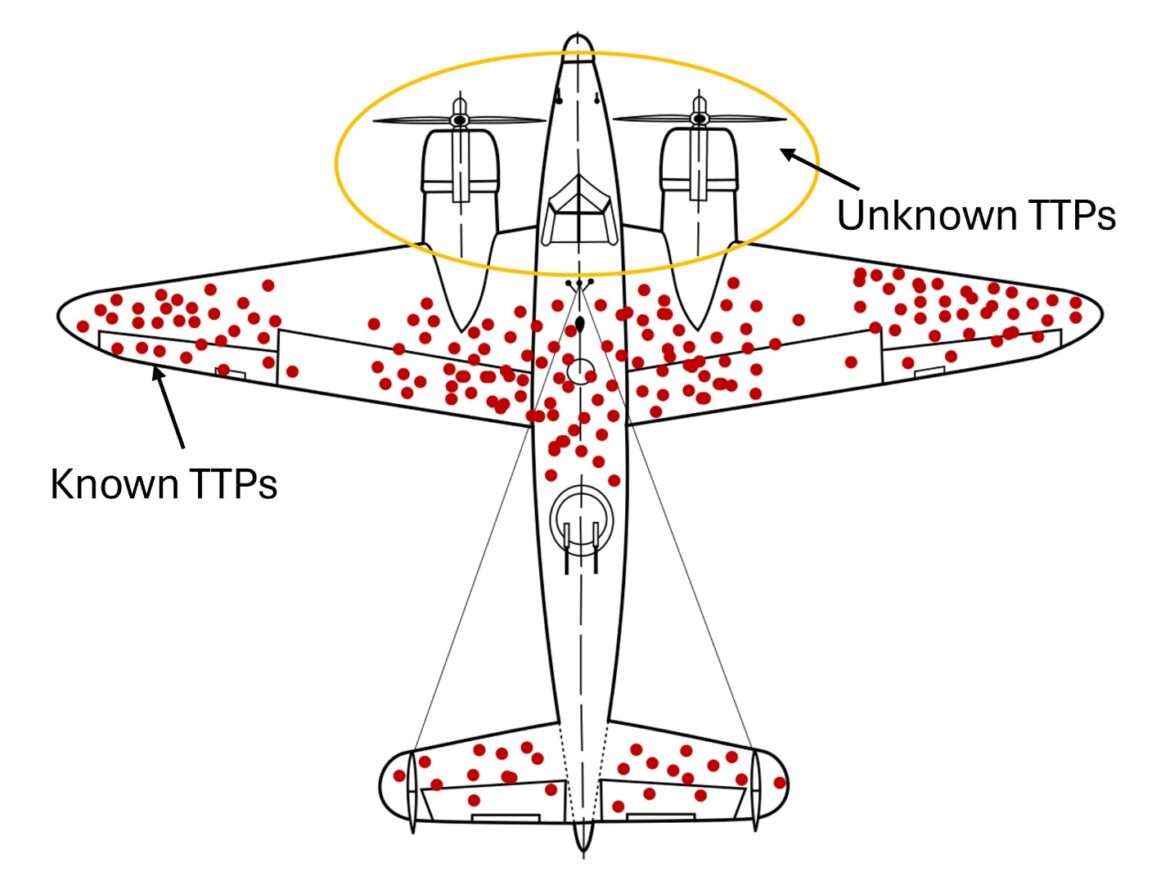

We’re going to crack open Pandora’s box for a second here. This fact is one of the main points that advocates of open-source tooling focus on. Without people bringing unknown TTPs to light, adversaries would be free to continue using them undetected. Exposing them allows defenders to identify Indicators of Compromise (IOCs) that can be used to improve monitoring.

Back to the discussion, though: if we know that reporting is biased toward the adversaries we currently know how to detect, how should we ensure that we represent valid customer threats? We should consider a few things when answering that question.

1. Not All Adversaries Care About Detection

A large class of threat actors doesn’t care about being caught and doesn’t change TTPs simply because their tooling was burned once. For example, ransomware groups evolve according to their own development timelines more than in response to being caught. We see continual use of ransomware strains even after mature organizations detect them.

They target low-hanging fruit, and being caught doesn’t stop them from hitting unprepared organizations. For many customers—especially those in less mature environments—simply replicating known, reported threats is enough. Which brings us to the second point.

2. The Intelligence Gap

Every organization has a different configuration and level of maturity. Just because Unit 42 reported detecting a threat doesn’t mean that security products or organizations industry-wide are detecting it.

This is what I (very unoriginally) refer to as the intelligence gap. When new threats are reported, they almost always come from a single source, and other reporting lags behind. In a few months—or even a year—many products will integrate updated signatures and detections. But this leaves a significant time gap, where red teams can be more agile than the defense industry.

We can start integrating newly identified TTPs to give organizations a better picture of how much risk they accept while waiting for their defensive products to be updated. It may also be the case that some products already detect the new TTPs without needing to change anything.

3. Staying Current with Open Reporting

This is why it’s important to stay up to date with open-source reporting. It allows us not only to integrate new TTPs but also to spot trends — for example, threat actors shifting from C# to Rust, or redeploying older PowerShell loaders.

Those trends help us give customers the most representative threat to train and test against — which brings me to the final point.

What Does “Emulation” Really Mean?

This might seem like a silly question, especially if you’ve heard my soapbox speech about the lack of rigor applied to emulation vs. simulation. Under strict definitions:

- Emulation reproduces the exact behavior of something (running the same commands a known threat did).

- Simulation only reproduces the outcome (e.g., data exfiltration, domain takeover).

MITRE follows the stricter definition, and Cat Self and Kate Esprit gave an excellent talk at Black Hat USA 2023 on how they identify reports with sufficient technical data to build real emulations. You can find their slides here.

There’s a lot of value in this approach — especially for creating objective, data-driven evaluations for software products. It allows direct comparisons between tools under identical conditions. However, it’s missing one key factor for red team emulation: humans.

Dynamic Adversaries Require Dynamic Red Teams

Modern compromises include hands-on-keyboard operators who are themselves dynamic. Whenever this comes up, I’m reminded of a quote I heard while working a Red Flag exercise at Nellis:

“Adversaries are dynamic, and those that train against static threats are destined to fail.”

Always using the same playbook trains defenders to counter only specific scenarios. This can happen between internal red and blue teams: because the blue team faces the same group repeatedly, its detections become tuned to that team’s behavior rather than real-world threats.

So, when conducting threat emulation, red teams must stay dynamic. They should mix in TTPs that align with the threat’s sophistication level but aren’t limited to those explicitly reported. In other words, they should not be constrained by the survivorship bias of reporting.

Reporting is valuable for identifying trends and common tooling, but red teams must explore beyond what’s visible — not just the tools that have already been burned.

Avoiding the Opposite Extreme

This doesn’t mean a red team emulating a .NET-heavy threat should suddenly pivot to using all-C tooling. That’s the opposite extreme — chasing shiny, undetectable tools that don’t align with the adversary’s real capabilities.

The goal is balance — representing real-world adversaries, but with enough flexibility to challenge defenders and surface unseen weaknesses.
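One way to picture that balance is as a simple filter over candidate techniques. The sketch below is only an illustration under stated assumptions: the `Technique` fields, the 1 to 5 sophistication scale, the `build_plan` helper, and the candidate entries are all hypothetical, not a real scoring system or a description of any specific actor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Technique:
    name: str
    reported: bool       # seen in public reporting on the emulated actor
    sophistication: int  # hypothetical scale: 1 (commodity) to 5 (bespoke)


def build_plan(candidates, actor_level):
    """Keep every technique the actor could plausibly field, reported or not."""
    plausible = [t for t in candidates if t.sophistication <= actor_level]
    # List reported techniques first, then plausible but unreported ones.
    return sorted(plausible, key=lambda t: (not t.reported, t.sophistication))


# Hypothetical candidates for a .NET-heavy actor of moderate sophistication.
CANDIDATES = [
    Technique("powershell download cradle", True, 1),
    Technique("in-memory .NET assembly load", True, 2),
    Technique("alternate .NET execution host", False, 2),
    Technique("custom kernel driver", False, 5),
]

plan = build_plan(CANDIDATES, actor_level=3)
for technique in plan:
    label = "reported" if technique.reported else "plausible, unreported"
    print(f"{technique.name}: {label}")
```

With these made-up inputs, the plan keeps the unreported technique that fits the actor’s level and drops the one that is far beyond it, which is the distinction between avoiding survivorship bias and chasing shiny tooling.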

The Iceberg Analogy

Let me close with this: red teaming is much like an iceberg. As with identified TTPs, the iceberg reveals only a fraction of its true size above the surface. Relying solely on reported threats exposes only a small portion of the potential risks.

Embracing the dynamic nature of adversaries means diving beneath the surface to uncover the unseen threats that lurk below. However, the iceberg is still one connected piece — we must stay within the boundaries of plausible TTPs while recognizing that both known and unknown threats matter.
